akshath raghav ravikiran

MS-ECE @ Purdue | AI-HW Architecture, Agentic-AI Systems

I’m passionate about designing solutions at the intersection of explainable learning algorithms, cloud infrastructure, and HW-SW co-optimized platforms.

I love discussing and learning about AI accelerator microarchitecture, ML compilers, and data-center infrastructure.

Pursuing my MS-ECE with Prof. Mark Johnson at Purdue University. Looking for full-time roles post-September 2025.

Feel free to reach out to me at araviki[at]purdue[dot]edu.

background

- F’25: Graduated with a B.Sc. in Computer Engineering! Completed the Scratchpad IP for the Ax01 Core. Led the Data Mine team to build an AI Platform for Dow’s Analytical Science division.

- Smr’25: Interned at BMW (Digital Campus Munich, Germany) in the FG-240 group, working on multiple projects focused on integrating AI Workflows into SDLC Offerings. You can find the projects here.

- S’25: Worked on several parameterizable IPs in the AI Hardware Team at SoCET. Completed my Capstone project (through ECE 49022) and won the Senior Design award for the BoilerNet system. Led the backend team on a Beck’s Hybrids-sponsored project through the Data Mine. Received funding from HII to work on a custom FFT-accelerator ASIC.

- F’24: Joined the Purdue SoCET Team as a TA. Joined the I-GUIDE Team as a Research Assistant (TDM HDR Fellowship), where we worked on scaling an HPC workflow for distributed inference on Apache Spark (+Sedona) through GCP (accepted at I-GUIDE Forum ‘25).

- Smr’24: Joined the Purdue SoCET group in the Digital Design team. Over the summer, I added the Zicond extension to the RISC-V core for the AFTx07 tape-out. Implemented the TinySpeech family of speech recognition models at VLSI System Design. I also wrote an ANSI-C based inference engine that runs out of the box in 8-bit precision for embedded inference.

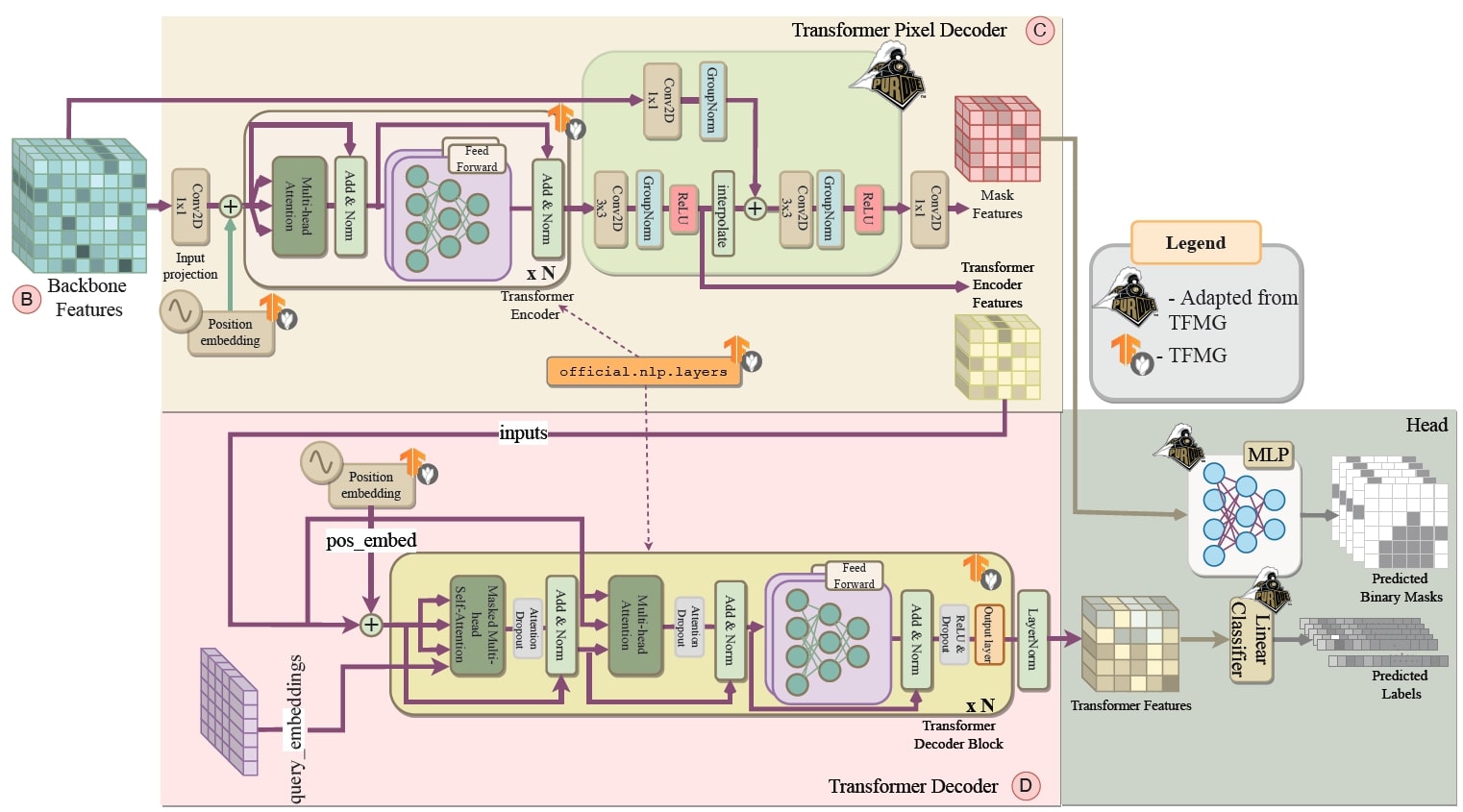

- F’23 - S’24: Worked at the Duality Lab, where we re-engineered the MaskFormer segmentation model (funded by Google!) from the PyTorch-based artifact to TensorFlow for publication in the TF Model Garden. You can find our paper here and code here. I also generated figures for the PeaTMOSS paper (accepted at MSR’24).

- S’24: Led a project at the CVES group @ Purdue ECE, where our goal was to define and evaluate reproducibility within AI/ML projects. I wrote the codebase for our pipeline and statistically quantified the importance of its parameters. I was also involved in multimodal (LM) understanding projects at the e-lab. I’ve built eugenie & grammarflow.

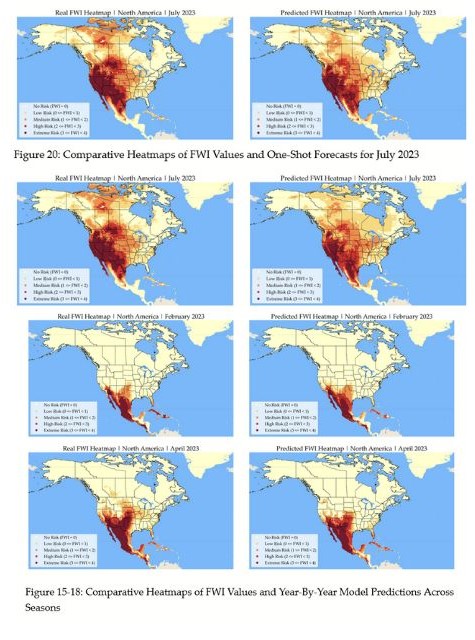

- S’23 - Smr’23: Interned at Ambee, where I deployed a worldwide fire forecasting system (F3) into their API and wrote automated scripts for their environmental-data-focused data lakes (still in use). You can find the whitepaper here and access the API here.

- F’22 - S’23: Helped lead a project supervised by Prof. Yuan Wang (currently at Stanford), where we aimed to correlate lightning activity with wildfire spread. Wrote big-data interfacing code for satellites across EUR/EUS/SAR and was responsible for packaging that data for use within a ConvLSTM model from DeepCube’s short-term forecasting work.

Find my reports here.

extras

In my free time, I enjoy photography, chess, reading manga, speed typing (130+ wpm), and whittling!

I can speak English, Hindi, Tamil, and Kannada. Currently, I’m learning German and ASL.

I also enjoy bowling, golf, and billiards. I follow the NBA (Warriors), the IPL (RCB), and the ATP Masters.

news

| Date | Update |
|---|---|
| Jul 31, 2025 | Joined BMW (Digital Campus Munich, Germany) as an intern in the FG-240 group! Working on integrating AI agents into IDPs and the company’s SDLC. |
| May 01, 2025 | Grateful to receive the “Senior Design” award for BoilerNet. |
| Aug 06, 2024 | Grateful to receive the Purdue OUR Scholars and DUIRI Scholarships. Excited to be starting as a research assistant in the NSF-funded I-GUIDE team. |
| Apr 30, 2024 | Our team’s report, “A Partial Replication of MaskFormer in TensorFlow on TPUs for the TensorFlow Model Garden,” is now available on arXiv! Find the code here, and report here. |
| Apr 23, 2024 | Received the Outstanding Sophomore in VIP award for the work I did at the CVES group. Read about it here. |

latest posts

| Date | Post |
|---|---|
| Aug 06, 2024 | [TL;DR] Energy-Based Transferability Estimation |
| Mar 23, 2024 | Set up Llama.cpp on university compute clusters 🦙 |